inspector-apm / neuron-ai

The AI Agent Development Kit for PHP.

Installs: 74 974

Dependents: 7

Suggesters: 0

Security: 0

Stars: 1 050

Watchers: 19

Forks: 109

Open Issues: 2

Requires

- php: ^8.1

- guzzlehttp/guzzle: ^7.9

- inspector-apm/inspector-php: ^3.15.10

- psr/http-message: ^1.0|^2.0

- psr/log: ^1.0|^2.0|^3.0

Requires (Dev)

- ext-pdo: *

- aws/aws-sdk-php: ^3.209

- doctrine/orm: ^2.20

- elasticsearch/elasticsearch: ^8.0

- friendsofphp/php-cs-fixer: ^3.75

- html2text/html2text: ^4.3

- monolog/monolog: ^3.9

- php-http/curl-client: ^2.3

- phpstan/phpstan: ^2.1

- phpunit/phpunit: ^9.0

- rector/rector: ^2.0

- symfony/cache: ^5.4 || ^6.4 || ^7.0

- symfony/process: ^5.0|^6.0|^7.0

- tomasvotruba/type-coverage: ^2.0

- typesense/typesense-php: ^5.0

Suggests

- aws/aws-sdk-php: ^3.209

- doctrine/orm: ^2.20

- elasticsearch/elasticsearch: ^7.0|^8.0

- php-http/curl-client: ^2.3

- symfony/process: ^4.0|^5.0|^6.0|^7.0

- typesense/typesense-php: ^5.0

Versions

- 2.x-dev

- 2.2.2

- 2.2.1

- 2.2.0

- 2.1.1

- 2.1.0

- 2.0.8

- 2.0.7

- 2.0.6

- 2.0.5

- 2.0.4

- 2.0.3

- 2.0.2

- 2.0.1

- 2.0.0

- 1.x-dev

- 1.17.5

- 1.17.4

- 1.17.3

- 1.17.2

- 1.17.1

- 1.17.0

- 1.16.23

- 1.16.22

- 1.16.21

- 1.16.20

- 1.16.19

- 1.16.18

- 1.16.17

- 1.16.16

- 1.16.15

- 1.16.14

- 1.16.13

- 1.16.12

- 1.16.11

- 1.16.10

- 1.16.9

- 1.16.8

- 1.16.7

- 1.16.6

- 1.16.5

- 1.16.4

- 1.16.3

- 1.16.2

- 1.16.1

- 1.16.0

- 1.15.22

- 1.15.21

- 1.15.20

- 1.15.19

- 1.15.18

- 1.15.17

- 1.15.16

- 1.15.15

- 1.15.14

- 1.15.13

- 1.15.12

- 1.15.11

- 1.15.10

- 1.15.9

- 1.15.8

- 1.15.7

- 1.15.6

- 1.15.5

- 1.15.4

- 1.15.3

- 1.15.2

- 1.15.1

- 1.15.0

- 1.14.29

- 1.14.28

- 1.14.27

- 1.14.26

- 1.14.25

- 1.14.24

- 1.14.23

- 1.14.22

- 1.14.21

- 1.14.20

- 1.14.19

- 1.14.18

- 1.14.17

- 1.14.16

- 1.14.15

- 1.14.14

- 1.14.13

- 1.14.12

- 1.14.11

- 1.14.10

- 1.14.9

- 1.14.8

- 1.14.7

- 1.14.6

- 1.14.5

- 1.14.4

- 1.14.3

- 1.14.2

- 1.14.1

- 1.14.0

- 1.13.3

- 1.13.2

- 1.13.1

- 1.13.0

- 1.12.18

- 1.12.17

- 1.12.16

- 1.12.15

- 1.12.14

- 1.12.13

- 1.12.12

- 1.12.11

- 1.12.10

- 1.12.9

- 1.12.8

- 1.12.7

- 1.12.6

- 1.12.5

- 1.12.4

- 1.12.3

- 1.12.2

- 1.12.1

- 1.12.0

- 1.11.10

- 1.11.9

- 1.11.8

- 1.11.7

- 1.11.6

- 1.11.5

- 1.11.4

- 1.11.3

- 1.11.2

- 1.11.1

- 1.11.0

- 1.10.20

- 1.10.19

- 1.10.18

- 1.10.17

- 1.10.16

- 1.10.15

- 1.10.14

- 1.10.13

- 1.10.12

- 1.10.11

- 1.10.10

- 1.10.9

- 1.10.8

- 1.10.7

- 1.10.6

- 1.10.5

- 1.10.4

- 1.10.3

- 1.10.2

- 1.10.1

- 1.10.0

- 1.9.37

- 1.9.36

- 1.9.35

- 1.9.34

- 1.9.33

- 1.9.32

- 1.9.31

- 1.9.30

- 1.9.29

- 1.9.28

- 1.9.27

- 1.9.26

- 1.9.25

- 1.9.24

- 1.9.23

- 1.9.22

- 1.9.21

- 1.9.20

- 1.9.19

- 1.9.18

- 1.9.17

- 1.9.16

- 1.9.15

- 1.9.14

- 1.9.13

- 1.9.12

- 1.9.11

- 1.9.10

- 1.9.9

- 1.9.8

- 1.9.7

- 1.9.6

- 1.9.5

- 1.9.4

- 1.9.3

- 1.9.2

- 1.9.1

- 1.9.0

- 1.8.19

- 1.8.18

- 1.8.17

- 1.8.16

- 1.8.15

- 1.8.14

- 1.8.13

- 1.8.12

- 1.8.11

- 1.8.10

- 1.8.9

- 1.8.8

- 1.8.7

- 1.8.6

- 1.8.5

- 1.8.4

- 1.8.3

- 1.8.2

- 1.8.1

- 1.8.0

- 1.7.2

- 1.7.1

- 1.7.0

- 1.6.0

- 1.5.4

- 1.5.3

- 1.5.2

- 1.5.1

- 1.5.0

- 1.4.2

- 1.4.1

- 1.4.0

- 1.3.2

- 1.3.1

- 1.3.0

- 1.2.25

- 1.2.24

- 1.2.23

- 1.2.22

- 1.2.21

- 1.2.20

- 1.2.19

- 1.2.18

- 1.2.17

- 1.2.16

- 1.2.15

- 1.2.14

- 1.2.13

- 1.2.12

- 1.2.11

- 1.2.10

- 1.2.9

- 1.2.8

- 1.2.7

- 1.2.6

- 1.2.5

- 1.2.4

- 1.2.3

- 1.2.2

- 1.2.1

- 1.2.0

- 1.1.3

- 1.1.2

- 1.1.1

- 1.1.0

- 1.0.1

- 1.0.0

- dev-main

- dev-retrieval

- dev-openai-responses-api

- dev-mcp-http

This package is auto-updated.

Last update: 2025-09-12 17:22:13 UTC

README

Warning

🚨 IMPORTANT: Repository Migration Notice

Effective October 1st, 2025, the official Neuron repository will be migrating from the Inspector GitHub organization to a dedicated Neuron organization.

For detailed migration instructions and configuration updates, please visit our Repository Migration Guide.

Create Full-Featured Agentic Applications in PHP

Before moving on, please support the community by giving the project a GitHub star ⭐️. Thank you!

What is Neuron?

Neuron is a PHP framework for creating and orchestrating AI Agents. It allows you to integrate AI entities into your existing PHP applications with a powerful and flexible architecture. We provide tools for the entire agentic application development lifecycle, from LLM interfaces, to data loading, to multi-agent orchestration, to monitoring and debugging. In addition, we provide tutorials and other educational content to help you get started using AI Agents in your projects.

Requirements

- PHP: ^8.1

Official documentation

Go to the official documentation

Guides & Tutorials

Check out the technical guides and tutorials archive to learn how to start creating your AI Agents with Neuron: https://docs.neuron-ai.dev/resources/guides-and-tutorials.

How To

- Install

- Create an Agent

- Talk to the Agent

- Monitoring

- Supported LLM Providers

- Tools & Toolkits

- MCP Connector

- Structured Output

- RAG

- Workflow

- Official Documentation

Install

Install the latest version of the package:

composer require inspector-apm/neuron-ai

Create an Agent

Neuron provides the Agent class, which you can extend to inherit the main features of the framework and create fully functional agents. This class automatically manages several advanced mechanisms for you, such as memory, tools and function calls, and even RAG systems. You can go deeper into these aspects in the documentation.

Let's create an Agent with the command below:

php vendor/bin/neuron make:agent DataAnalystAgent

<?php

namespace App\Neuron;

use NeuronAI\Agent;
use NeuronAI\SystemPrompt;
use NeuronAI\Providers\AIProviderInterface;
use NeuronAI\Providers\Anthropic\Anthropic;

class DataAnalystAgent extends Agent
{
    protected function provider(): AIProviderInterface
    {
        return new Anthropic(
            key: 'ANTHROPIC_API_KEY',
            model: 'ANTHROPIC_MODEL',
        );
    }

    protected function instructions(): string
    {
        return (string) new SystemPrompt(
            background: [
                "You are a data analyst expert in creating reports from SQL databases."
            ]
        );
    }
}

The SystemPrompt class is designed to take your base instructions and build a consistent prompt for the underlying model, reducing the effort required for prompt engineering.
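To illustrate the idea, here is a minimal, self-contained sketch of how separate lists of instructions can be rendered into one consistently formatted prompt. The `PromptSections` class and its section titles are hypothetical; this is not Neuron's `SystemPrompt` implementation, whose actual output format may differ.

```php
<?php

// Hypothetical prompt builder (not Neuron's SystemPrompt): each section is a titled list of lines.
final class PromptSections
{
    /**
     * @param string[] $background Who the assistant is and what it does.
     * @param string[] $steps      Internal steps the assistant should follow.
     * @param string[] $output     Rules for formatting the answer.
     */
    public function __construct(
        private array $background = [],
        private array $steps = [],
        private array $output = [],
    ) {}

    public function __toString(): string
    {
        $sections = [
            'BACKGROUND' => $this->background,
            'STEPS' => $this->steps,
            'OUTPUT' => $this->output,
        ];

        $parts = [];
        foreach ($sections as $title => $lines) {
            if ($lines === []) {
                continue; // Skip empty sections so the prompt stays compact.
            }
            $parts[] = "# {$title}\n" . implode("\n", $lines);
        }

        return implode("\n\n", $parts);
    }
}

$prompt = (string) new PromptSections(
    background: ['You are a data analyst expert in creating reports from SQL databases.'],
    output: ['Answer in plain English.'],
);

echo $prompt, PHP_EOL;
```

Because every prompt goes through the same builder, agents share one predictable layout instead of each hand-writing its own.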

Talk to the Agent

Send a prompt to the agent to get a response from the underlying LLM:

use App\Neuron\DataAnalystAgent;
use NeuronAI\Chat\Messages\UserMessage;

$agent = DataAnalystAgent::make();

$response = $agent->chat(
    new UserMessage("Hi, I'm Valerio. Who are you?")
);
echo $response->getContent();
// I'm a data analyst. How can I help you today?

$response = $agent->chat(
    new UserMessage("Do you remember my name?")
);
echo $response->getContent();
// Your name is Valerio, as you said in your introduction.

As you can see in the example above, the Agent automatically remembers the ongoing conversation. Learn more about memory in the documentation.
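Conceptually, conversation memory with chat-based LLM APIs works by resending earlier turns with each new request, because the model does not keep state between calls. The library-free sketch below illustrates that idea with a hypothetical `ConversationMemory` class and an assumed `role`/`content` message shape; it is not Neuron's chat history implementation.

```php
<?php

// Hypothetical in-memory conversation buffer (not Neuron's chat history classes).
final class ConversationMemory
{
    /** @var array<int, array{role: string, content: string}> */
    private array $messages = [];

    public function __construct(private int $maxMessages = 20) {}

    public function add(string $role, string $content): void
    {
        $this->messages[] = ['role' => $role, 'content' => $content];

        // Drop the oldest messages once the buffer exceeds its limit:
        // one simple way to bound the size of each request.
        if (count($this->messages) > $this->maxMessages) {
            $this->messages = array_slice($this->messages, -$this->maxMessages);
        }
    }

    /** Messages to send with the next request: the whole retained history. */
    public function all(): array
    {
        return $this->messages;
    }
}

$memory = new ConversationMemory(maxMessages: 3);
$memory->add('user', "Hi, I'm Valerio. Who are you?");
$memory->add('assistant', "I'm a data analyst. How can I help you today?");
$memory->add('user', 'Do you remember my name?');

// The history sent with the third message still contains the introduction,
// which is what lets the model recall the name.
foreach ($memory->all() as $message) {
    echo "{$message['role']}: {$message['content']}", PHP_EOL;
}
```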

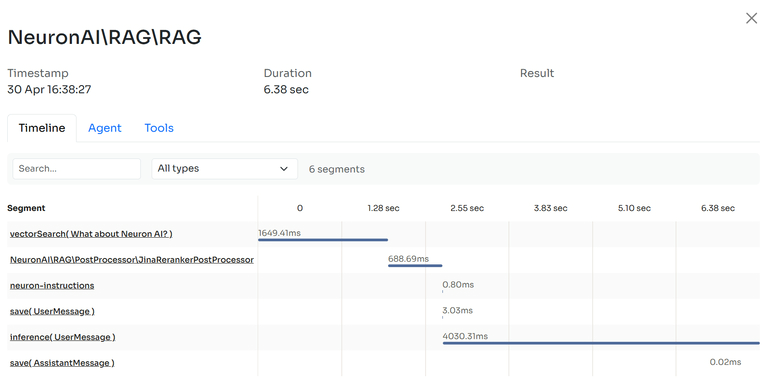

Monitoring & Debugging

When you integrate AI Agents into your application, you are no longer working only with functions and deterministic code: you also program your agent by influencing probability distributions. The same input can produce different outputs. That means reproducibility, versioning, and debugging become real problems.

Many of the Agents you build with Neuron will contain multiple steps with multiple invocations of LLM calls, tool usage, access to external memories, etc. As these applications get more and more complex, it becomes crucial to be able to inspect what exactly your agent is doing and why.

Why is the model making certain decisions? What data is the model reacting to? Prompting is not programming in the common sense: there are no static types, small changes can break the output, long prompts add latency, and no two models behave exactly the same with the same prompt.

The best way to keep your AI application under control is with Inspector. After you sign up, make sure to set the INSPECTOR_INGESTION_KEY variable in the application environment file to start monitoring:

INSPECTOR_INGESTION_KEY=fwe45gtxxxxxxxxxxxxxxxxxxxxxxxxxxxx

After configuring the environment variable, you will see the agent execution timeline in your Inspector dashboard.

Learn more about Monitoring in the documentation.

Supported LLM Providers

With Neuron, you can switch between LLM providers with just one line of code, without any impact on your agent implementation. Supported providers:

- Anthropic

- OpenAI (also as an embeddings provider)

- OpenAI on Azure

- Ollama (also as an embeddings provider)

- OpenAILike

- Gemini (also as an embeddings provider)

- Mistral

- HuggingFace

- Deepseek

- Grok

- AWS Bedrock Runtime
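Switching providers in one line works because the agent depends only on a provider interface: in the examples in this README, `provider()` returns an `AIProviderInterface`. The library-free sketch below shows the same design with hypothetical names (`ChatProvider`, `EchoProvider`, `UppercaseProvider`, `SimpleAgent`); a real provider would call an LLM API instead of transforming a string.

```php
<?php

// Hypothetical contract that every provider implements.
interface ChatProvider
{
    public function reply(string $prompt): string;
}

// Two fake providers standing in for real LLM backends.
final class EchoProvider implements ChatProvider
{
    public function reply(string $prompt): string
    {
        return "echo: {$prompt}";
    }
}

final class UppercaseProvider implements ChatProvider
{
    public function reply(string $prompt): string
    {
        return strtoupper($prompt);
    }
}

// The agent only knows about the interface, so its logic never changes.
abstract class SimpleAgent
{
    abstract protected function provider(): ChatProvider;

    public function chat(string $prompt): string
    {
        return $this->provider()->reply($prompt);
    }
}

final class MyAgent extends SimpleAgent
{
    protected function provider(): ChatProvider
    {
        // Replacing this line with `return new UppercaseProvider();` is the only change needed.
        return new EchoProvider();
    }
}

echo (new MyAgent())->chat('hello'), PHP_EOL;
```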

Tools & Toolkits

Make your agent able to perform concrete tasks, like reading from a database, by adding tools or toolkits (collections of tools).

<?php

namespace App\Neuron;

use NeuronAI\Agent;
use NeuronAI\Providers\AIProviderInterface;
use NeuronAI\Providers\Anthropic\Anthropic;
use NeuronAI\SystemPrompt;
use NeuronAI\Tools\Toolkits\MySQL\MySQLToolkit;

class DataAnalystAgent extends Agent
{
    protected function provider(): AIProviderInterface
    {
        return new Anthropic(
            key: 'ANTHROPIC_API_KEY',
            model: 'ANTHROPIC_MODEL',
        );
    }

    protected function instructions(): string
    {
        return (string) new SystemPrompt(
            background: [
                "You are a data analyst expert in creating reports from SQL databases."
            ]
        );
    }

    protected function tools(): array
    {
        return [
            // The toolkit needs a PDO connection; \DB::connection()->getPdo() is the Laravel way to get one.
            MySQLToolkit::make(
                \DB::connection()->getPdo()
            ),
        ];
    }
}

Ask the agent something about your database:

use App\Neuron\DataAnalystAgent;
use NeuronAI\Chat\Messages\UserMessage;

$response = DataAnalystAgent::make()->chat(
    new UserMessage("How many orders did we receive today?")
);

echo $response->getContent();

Learn more about Tools in the documentation.
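Tool calling generally follows a request/dispatch loop: the model replies with the name of a tool plus JSON-encoded arguments, the application runs the matching function, and the result is sent back to the model. The sketch below covers only the dispatch step in plain PHP. The `ToolRegistry` class, the `count_orders` tool, and the `{"name": ..., "arguments": ...}` shape are assumptions for illustration, not Neuron's internals or any provider's exact wire format.

```php
<?php

// Hypothetical registry mapping tool names to descriptions and callables.
final class ToolRegistry
{
    /** @var array<string, array{description: string, handler: callable}> */
    private array $tools = [];

    public function register(string $name, string $description, callable $handler): void
    {
        $this->tools[$name] = ['description' => $description, 'handler' => $handler];
    }

    /** Run the tool requested by the model and return its result as a string. */
    public function dispatch(string $toolCallJson): string
    {
        $call = json_decode($toolCallJson, true, 512, JSON_THROW_ON_ERROR);
        $name = $call['name'] ?? '';

        if (!isset($this->tools[$name])) {
            return "Unknown tool: {$name}"; // Report back to the model instead of crashing.
        }

        // String keys are unpacked as named arguments, so they must match the handler's parameters.
        $result = ($this->tools[$name]['handler'])(...($call['arguments'] ?? []));

        return (string) $result;
    }
}

$registry = new ToolRegistry();
$registry->register(
    'count_orders',
    'Count the orders received on a given date (YYYY-MM-DD).',
    // Stub data in place of a real database query.
    fn (string $date): int => ['2025-09-12' => 42][$date] ?? 0,
);

// A tool call as the model might emit it.
echo $registry->dispatch('{"name":"count_orders","arguments":{"date":"2025-09-12"}}'), PHP_EOL;
```

The description string is what the model reads when deciding whether to call the tool, so it is worth writing as carefully as a prompt.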

MCP Connector

Instead of implementing tools manually, you can connect tools exposed by an MCP server with the McpConnector component:

<?php

namespace App\Neuron;

use NeuronAI\Agent;
use NeuronAI\MCP\McpConnector;
use NeuronAI\Providers\AIProviderInterface;
use NeuronAI\Providers\Anthropic\Anthropic;

class DataAnalystAgent extends Agent
{
    protected function provider(): AIProviderInterface
    {
        ...
    }

    protected function instructions(): string
    {
        ...
    }

    protected function tools(): array
    {
        return [
            // Connect to an MCP server
            ...McpConnector::make([
                'command' => 'npx',
                'args' => ['-y', '@modelcontextprotocol/server-everything'],
            ])->tools(),
        ];
    }
}

Learn more about MCP connector in the documentation.
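MCP servers communicate over JSON-RPC 2.0, and a server launched from a `command` and `args`, as in the example above, is typically reached over the stdio transport, where each message is one line of JSON. The sketch below only builds the messages a client writes before listing the server's tools: the `initialize` request, the `notifications/initialized` notification, and `tools/list`. It is a simplified illustration (a real client must also read and check the responses), not how `McpConnector` is implemented; the `example-client` name is hypothetical.

```php
<?php

// Build one JSON-RPC 2.0 message, encoded on a single line as the stdio transport expects.
function jsonRpcLine(string $method, array $params = [], ?int $id = null): string
{
    $message = ['jsonrpc' => '2.0', 'method' => $method];
    if ($id !== null) {
        $message['id'] = $id; // Requests carry an id; notifications do not.
    }
    if ($params !== []) {
        $message['params'] = $params;
    }

    // json_encode escapes newlines inside strings, so the output has no raw line breaks.
    return json_encode($message, JSON_THROW_ON_ERROR | JSON_UNESCAPED_SLASHES) . "\n";
}

// Messages a client sends, in order, before it can list the server's tools.
$messages = [
    jsonRpcLine('initialize', [
        'protocolVersion' => '2024-11-05',
        'capabilities' => new stdClass(), // Encodes as {} rather than [].
        'clientInfo' => ['name' => 'example-client', 'version' => '0.1.0'],
    ], 1),
    jsonRpcLine('notifications/initialized'),
    jsonRpcLine('tools/list', [], 2),
];

foreach ($messages as $line) {
    echo $line;
}
```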

Structured Output

In some scenarios, such as business process automation or data extraction, you need Agents to understand natural language but produce output in a structured format that another downstream system can consume.

use App\Neuron\MyAgent;
use NeuronAI\Chat\Messages\UserMessage;
use NeuronAI\StructuredOutput\SchemaProperty;

/*
 * Define the output structure as a PHP class.
 */
class Person
{
    #[SchemaProperty(description: 'The user name')]
    public string $name;

    #[SchemaProperty(description: 'What the user loves to eat')]
    public string $preference;
}

// Talk to the agent requiring the structured output
$person = MyAgent::make()->structured(
    new UserMessage("I'm John and I like pizza!"),
    Person::class
);

echo $person->name.' likes '.$person->preference;
// John likes pizza

Learn more about Structured Output in the documentation.
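A common way to implement structured output is to turn the PHP class into a JSON Schema that is sent to the model, then map the model's JSON reply back onto an object. The self-contained sketch below shows both halves with PHP reflection. The `Describe` attribute and the `schemaFor()` function are hypothetical stand-ins for Neuron's `SchemaProperty`, the JSON reply is hard-coded rather than produced by a model, and Neuron's actual schema generation may handle more types and options.

```php
<?php

// Hypothetical attribute, playing the role SchemaProperty plays in the example above.
#[Attribute(Attribute::TARGET_PROPERTY)]
final class Describe
{
    public function __construct(public string $description) {}
}

final class Person
{
    #[Describe('The user name')]
    public string $name;

    #[Describe('What the user loves to eat')]
    public string $preference;
}

/** Build a JSON Schema object from the public typed properties of a class. */
function schemaFor(string $class): array
{
    // Map PHP scalar types to JSON Schema types; other types are simplified to "string" here.
    $typeMap = ['string' => 'string', 'int' => 'integer', 'float' => 'number', 'bool' => 'boolean'];
    $properties = [];
    $required = [];

    foreach ((new ReflectionClass($class))->getProperties(ReflectionProperty::IS_PUBLIC) as $property) {
        $type = $property->getType();
        $phpType = $type instanceof ReflectionNamedType ? $type->getName() : 'string';

        $schema = ['type' => $typeMap[$phpType] ?? 'string'];
        foreach ($property->getAttributes(Describe::class) as $attribute) {
            $schema['description'] = $attribute->newInstance()->description;
        }

        $properties[$property->getName()] = $schema;
        if ($type !== null && !$type->allowsNull()) {
            $required[] = $property->getName(); // Non-nullable properties must be filled.
        }
    }

    return ['type' => 'object', 'properties' => $properties, 'required' => $required];
}

echo json_encode(schemaFor(Person::class), JSON_PRETTY_PRINT), PHP_EOL;

// Second half: map a (hard-coded) JSON reply back onto a Person.
$reply = '{"name":"John","preference":"pizza"}';
$person = new Person();
foreach (json_decode($reply, true, 512, JSON_THROW_ON_ERROR) as $key => $value) {
    if (property_exists($person, $key)) {
        $person->$key = $value;
    }
}

echo $person->name . ' likes ' . $person->preference, PHP_EOL; // John likes pizza
```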

RAG

To create a RAG, you need to attach some components in addition to the AI provider: a vector store and an embeddings provider.

Let's create a RAG with the command below:

php vendor/bin/neuron make:rag MyChatBot

Here is an example of a RAG implementation:

<?php

namespace App\Neuron;

use NeuronAI\Providers\AIProviderInterface;
use NeuronAI\Providers\Anthropic\Anthropic;
use NeuronAI\RAG\Embeddings\EmbeddingsProviderInterface;
use NeuronAI\RAG\Embeddings\VoyageEmbeddingProvider;
use NeuronAI\RAG\RAG;
use NeuronAI\RAG\VectorStore\PineconeVectorStore;
use NeuronAI\RAG\VectorStore\VectorStoreInterface;

class MyChatBot extends RAG
{
    protected function provider(): AIProviderInterface
    {
        return new Anthropic(
            key: 'ANTHROPIC_API_KEY',
            model: 'ANTHROPIC_MODEL',
        );
    }

    protected function embeddings(): EmbeddingsProviderInterface
    {
        return new VoyageEmbeddingProvider(
            key: 'VOYAGE_API_KEY',
            model: 'VOYAGE_MODEL'
        );
    }

    protected function vectorStore(): VectorStoreInterface
    {
        return new PineconeVectorStore(
            key: 'PINECONE_API_KEY',
            indexUrl: 'PINECONE_INDEX_URL'
        );
    }
}

Learn more about RAG in the documentation.
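At query time, a RAG system embeds the question, asks the vector store for the most similar stored chunks, and adds them to the prompt. The sketch below stands in for the embeddings provider and the vector store with made-up 3-dimensional vectors and an in-memory array, purely to show a cosine-similarity search; real embeddings come from a model such as the Voyage provider above and typically have hundreds or thousands of dimensions. The `cosineSimilarity()` and `topK()` helpers are hypothetical.

```php
<?php

/** Cosine similarity between two vectors of equal length. */
function cosineSimilarity(array $a, array $b): float
{
    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;
    foreach ($a as $i => $value) {
        $dot += $value * $b[$i];
        $normA += $value ** 2;
        $normB += $b[$i] ** 2;
    }

    return ($normA == 0.0 || $normB == 0.0) ? 0.0 : $dot / (sqrt($normA) * sqrt($normB));
}

/** Return the texts of the $k documents most similar to the query vector. */
function topK(array $queryVector, array $documents, int $k): array
{
    $scored = array_map(
        fn (array $doc): array => [$doc['text'], cosineSimilarity($queryVector, $doc['vector'])],
        $documents,
    );
    usort($scored, fn (array $x, array $y): int => $y[1] <=> $x[1]); // Highest score first.

    return array_column(array_slice($scored, 0, $k), 0);
}

// Made-up 3-dimensional "embeddings" for illustration only.
$documents = [
    ['text' => 'Refunds are processed within 5 days.', 'vector' => [0.9, 0.1, 0.0]],
    ['text' => 'Our office is in Rome.', 'vector' => [0.0, 0.2, 0.9]],
    ['text' => 'Refund requests need an order number.', 'vector' => [0.8, 0.3, 0.1]],
];
$query = [1.0, 0.2, 0.0]; // Pretend embedding of "How do refunds work?"

$context = topK($query, $documents, 2);

// The retrieved chunks would then be added to the prompt sent to the LLM.
echo implode(PHP_EOL, $context), PHP_EOL;
```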

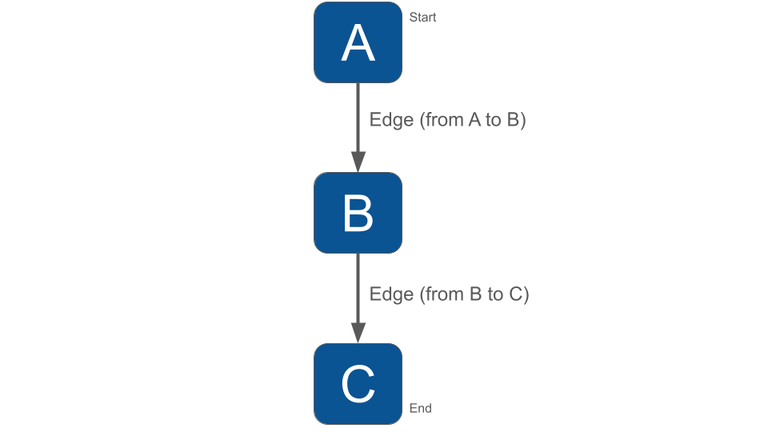

Workflow

Think of a Workflow as a smart flowchart for your AI applications. The idea behind Workflow is to allow developers to use all the Neuron components, like AI providers, embeddings, data loaders, chat history, vector stores, etc., as standalone components to create fully customized agentic entities.

The Agent and RAG classes are ready-to-use implementations of the most common patterns, such as retrieval, tool calls, and structured output. Workflow allows you to program your agentic system completely from scratch. If you need their built-in capabilities, you can still use Agent and RAG inside a Workflow to complete tasks, like any other component.
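A workflow can be pictured as a graph of nodes, where each node does one unit of work on a shared state and decides which node runs next. The sketch below shows that control flow in plain PHP; the node names, the state array, and the `runWorkflow()` function are hypothetical and do not reflect Neuron's Workflow API. The closures stand in for components such as a retriever or an LLM call.

```php
<?php

/**
 * Run nodes until one returns null as the next node.
 *
 * @param array<string, callable(array): array{0: array, 1: ?string}> $nodes
 */
function runWorkflow(array $nodes, string $start, array $state, int $maxSteps = 100): array
{
    $current = $start;
    for ($step = 0; $current !== null; $step++) {
        if ($step >= $maxSteps) {
            throw new RuntimeException('Workflow did not finish; possible loop.');
        }
        // Each node returns the updated state and the name of the next node.
        [$state, $current] = $nodes[$current]($state);
    }

    return $state;
}

$nodes = [
    // Stand-in for retrieving data (e.g. from a vector store or a database).
    'retrieve' => fn (array $s): array => [[...$s, 'facts' => ['42 orders today']], 'draft'],
    // Stand-in for an LLM call that writes a draft from the retrieved facts.
    'draft' => fn (array $s): array => [
        [...$s, 'attempts' => $s['attempts'] + 1, 'draft' => 'Summary: ' . implode(', ', $s['facts'])],
        'check',
    ],
    // Conditional edge standing in for a quality check: redraft once, then stop.
    'check' => fn (array $s): array => [$s, $s['attempts'] < 2 ? 'draft' : null],
];

$result = runWorkflow($nodes, 'retrieve', ['attempts' => 0]);
echo $result['draft'], ' (attempts: ', $result['attempts'], ')', PHP_EOL;
```

The `$maxSteps` guard is a design choice worth keeping in any loop-capable graph, since an LLM-driven branch may never reach its exit condition.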

Neuron Workflow supports a robust human-in-the-loop pattern, enabling human intervention at any point in an automated process. This is especially useful in large language model (LLM)-driven applications where model output may require validation, correction, or additional context to complete the task.
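One way to support human-in-the-loop is to let a node pause the run, keep the current state, and resume from the same node once a person has answered. The sketch below shows that pause/resume flow with a hypothetical `Interrupt` exception and `run()` function; Neuron's actual interruption and persistence API is not shown here and may look different.

```php
<?php

// Thrown by a node that needs human input; carries the question and the paused state.
final class Interrupt extends Exception
{
    public function __construct(
        public readonly string $question,
        public readonly string $node,
        public readonly array $state,
    ) {
        parent::__construct($question);
    }
}

/** Run nodes starting from $node until one returns null as the next node. */
function run(array $nodes, ?string $node, array $state): array
{
    while ($node !== null) {
        [$state, $node] = $nodes[$node]($state);
    }

    return $state;
}

$nodes = [
    // Stand-in for an LLM proposing an action.
    'propose' => fn (array $s): array => [[...$s, 'action' => 'Refund order #1001'], 'approve'],
    // Pause here until a human has answered.
    'approve' => function (array $s): array {
        if (!array_key_exists('approved', $s)) {
            throw new Interrupt("Approve '{$s['action']}'?", 'approve', $s);
        }
        return [$s, $s['approved'] ? 'execute' : null];
    },
    'execute' => fn (array $s): array => [[...$s, 'done' => true], null],
];

$final = null;
try {
    $final = run($nodes, 'propose', []);
} catch (Interrupt $pause) {
    echo $pause->question, PHP_EOL; // In a real app: persist $pause->state and ask the user.
    $humanAnswer = true;            // Simulated approval.
    $final = run($nodes, $pause->node, [...$pause->state, 'approved' => $humanAnswer]);
}
```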

Learn more about Workflow in the documentation.